Tesla Autopilot 8 is finally here. I was lucky enough to get a sneak peek at the world’s most famous Beta software and test it around New York City, and my first impressions suggest my earlier predictions were fairly spot-on. Autopilot 8 is a modest step forward in user interface and functionality, but a major step forward in safety and effectiveness.

The obvious changes are cosmetic, but the biggest change—improved radar signal processing—won’t become apparent for weeks or months, after which the breadth of improvements should be incontrovertible.

Fleet Learning Is Everything

The release of Autopilot 8 within 48 hours of the DOT’s new guidelines highlights the growing chasm between Tesla’s Level 2 semi-autonomous suite and rivals’ deliberate pause at Advanced Driver Assistance Systems, or ADAS.

While legacy OEMs hope to reach Level 4 autonomy via localized testing within 3 to 5 years, Tesla’s combination of Fleet Learning and OTA (over-the-air) updates has yielded more (and more significant) software improvements in the 11 months since Autopilot’s initial release than most manufacturers achieve in a traditional 3-to-5-year model cycle.

If and when the DOT’s guidelines are adopted in anything near their current form, Tesla will meet them via one or more wireless updates. The OEMs? Field updates via USB, maybe? That didn’t work for FCA. Going to dealers? A lot of people don’t check their mail. Or e-mail. Or want to go back to dealers.

Whatever George Hotz’s differences with Elon Musk, Comma.ai and Tesla have more in common with each other than not. The power of crowdsourcing and rapid software improvement cycles cannot be denied. This is the crux of the problem the OEMs face. The OEMs, firewalled and hobbled by dealer franchise agreements prohibiting wireless updates, are in danger of letting Tesla, Comma.ai, and the like reach Level 4 first, on the shoulders of crowdsourced data generated by networked Level 2/3 systems. You know, systems like Autopilot 8.

But I digress. Let’s take a first look at some of the most important changes in Autopilot 8, based on 48 hours of testing in and around Manhattan:

Involuntary Disengagements & Adoption Of Aviation Automation Lockouts

As Elon Musk pointed out in a September 11th conference call, the majority of incidents with Autopilot have occurred with veteran users growing overconfident in the system. Such users would deliberately ignore multiple disengagement warnings over short periods, then re-engage the system as soon as possible, repeating a cycle that might eventually lead to a crash.

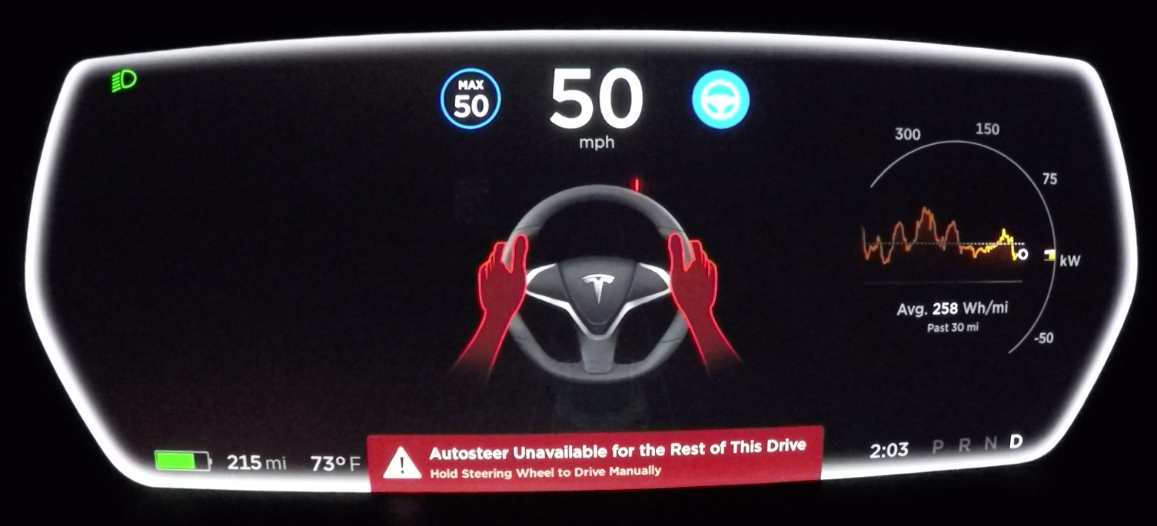

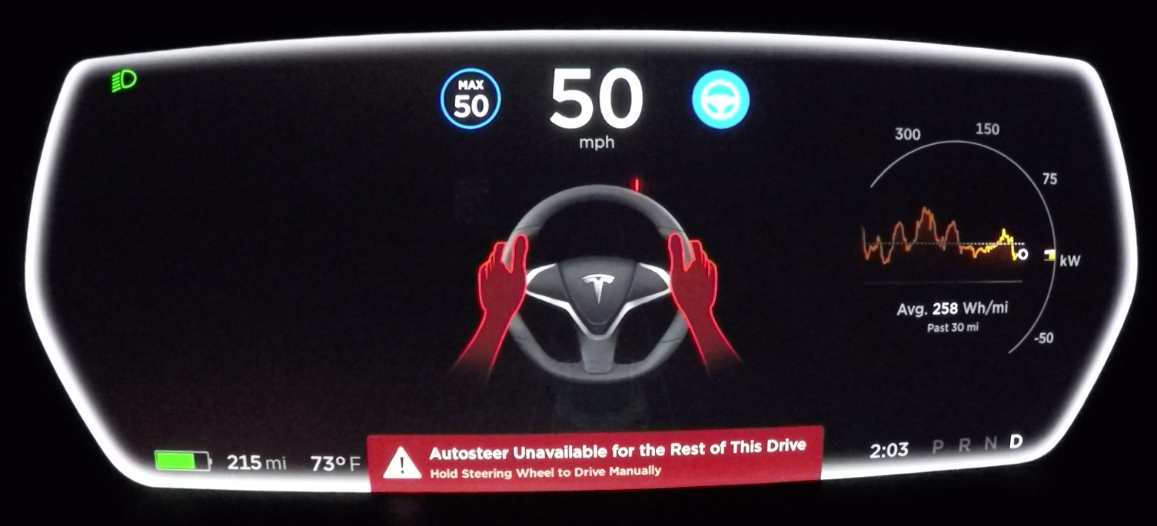

Autopilot 8 stops this potentially dangerous cycle by locking out Autosteer if the user ignores three warnings within one hour. Want to reset the system? You have to pull over and put your Tesla in Park.
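The lockout rule is simple enough to express as a small state machine. The sketch below is hypothetical, not Tesla’s code: it assumes the hour is a rolling window measured in seconds, and that shifting into Park clears the warning history as well as the lockout. The class and method names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List

WARNING_LIMIT = 3          # three ignored warnings (per the article)
WINDOW_SECONDS = 60 * 60   # within one hour (per the article)


@dataclass
class AutosteerLockout:
    """Hypothetical model of the Autopilot 8 lockout rule; not Tesla's code."""

    warning_times: List[float] = field(default_factory=list)
    locked_out: bool = False

    def record_ignored_warning(self, t: float) -> None:
        # Drop warnings that have aged out of the trailing one-hour window.
        self.warning_times = [w for w in self.warning_times if t - w < WINDOW_SECONDS]
        self.warning_times.append(t)
        if len(self.warning_times) >= WARNING_LIMIT:
            self.locked_out = True  # Autosteer stays unavailable from here on

    def can_engage_autosteer(self) -> bool:
        return not self.locked_out

    def shift_to_park(self) -> None:
        # The only reset the article describes: pull over and select Park.
        # Clearing the warning history here is an assumption.
        self.locked_out = False
        self.warning_times.clear()


if __name__ == "__main__":
    car = AutosteerLockout()
    for t in (0, 600, 1200):  # three ignored warnings, ten minutes apart
        car.record_ignored_warning(t)
    print(car.can_engage_autosteer())  # False
    car.shift_to_park()
    print(car.can_engage_autosteer())  # True
```

Three warnings spread over more than an hour (say at 0, 30 and 62 minutes) never accumulate to three inside the window, so in this sketch they would not trigger the lockout.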

This is similar to Airbus automation lockouts. Under certain circumstances, if Airbus automation disengages, dropping from what’s called “Normal Law” (the highest state of automation) to “Alternate Law” (an intermediate state), Normal Law cannot be re-engaged until after landing.

Here’s the first warning, with an all-new white pulse effect around the cluster’s edge:

And the second:

And the final lockout alert, which is accompanied by a LOUD audible alert:

This got my attention. But it still could have been louder.

All in all, this was a critical and necessary change to Tesla’s system, and will undoubtedly become standard on all semi-autonomous systems.

The Promise of Improved Radar Processing

Musk wrote a detailed blog post explaining how this works, along with its myriad benefits, but the primary improvement will come from a geo-located whitelist generated via Fleet Learning. The data gathered by users, whether or not Autopilot is engaged, will distinguish whether a large metal object ahead is an overhead sign, which can be ignored, or a truck crossing perpendicular to a Tesla’s path, which cannot. As Tesla owners crowdsource an increasingly broad radar map, events like the Joshua Brown crash (and false braking events) will become proportionally less likely.
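The whitelist idea described above can be sketched in a few dozen lines. This is a toy illustration under loudly stated assumptions, not Tesla’s system: the grid size, the five-pass threshold, and the rule that a driver braking at a location wipes its count are all invented, as are the class and function names. It also ignores the camera, which the real system combines with radar.

```python
from collections import defaultdict
from typing import DefaultDict, Tuple

CELL_DEGREES = 0.0001     # assumed grid resolution (about 11 m of latitude)
SAFE_PASS_THRESHOLD = 5   # assumed number of clean passes before whitelisting

Cell = Tuple[int, int]


def to_cell(lat: float, lon: float) -> Cell:
    """Quantize a GPS fix so nearby reports land in the same bucket."""
    return (round(lat / CELL_DEGREES), round(lon / CELL_DEGREES))


class RadarWhitelist:
    """Hypothetical fleet-built map of stationary radar returns that are safe to ignore."""

    def __init__(self) -> None:
        self.safe_passes: DefaultDict[Cell, int] = defaultdict(int)

    def report_pass(self, lat: float, lon: float, driver_braked: bool) -> None:
        cell = to_cell(lat, lon)
        if driver_braked:
            # Assumption: a driver braking here is evidence of a real obstacle,
            # so earlier clean passes no longer count.
            self.safe_passes[cell] = 0
        else:
            self.safe_passes[cell] += 1

    def is_whitelisted(self, lat: float, lon: float) -> bool:
        # .get() avoids inserting empty entries into the defaultdict.
        return self.safe_passes.get(to_cell(lat, lon), 0) >= SAFE_PASS_THRESHOLD


def should_brake_for_stationary_return(wl: RadarWhitelist, lat: float, lon: float) -> bool:
    """Brake for a stationary radar object unless the fleet has whitelisted its location."""
    return not wl.is_whitelisted(lat, lon)


if __name__ == "__main__":
    wl = RadarWhitelist()
    sign = (40.7580, -73.9855)  # an overhead sign passed cleanly by the fleet
    for _ in range(SAFE_PASS_THRESHOLD):
        wl.report_pass(*sign, driver_braked=False)
    print(should_brake_for_stationary_return(wl, *sign))              # False
    print(should_brake_for_stationary_return(wl, 40.7600, -73.9855))  # True
```

The design choice worth noticing is that the default is to brake: an object is ignored only after the fleet has accumulated evidence that it is harmless, which is why coverage, and therefore the benefit, should grow with fleet mileage.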

As autonomous-vehicle guru Brad Templeton pointed out, there are really only two ways to reduce the likelihood of another crash like Joshua Brown’s: installing one or more costly LIDAR sensors, or the cheaper option of improving upon the fairly primitive radar signal processing of current systems.

While everyone else waits for LIDAR to get smaller and cheaper, we know what Musk chose.

We’ll see how quickly the whitelist data propagates back to Teslas in the field. With 3+ million miles of driving data being gathered daily, it shouldn’t take more than a few months. I’ll report back in early November, the next time I take a Model S on a lap of Manhattan, with a then-versus-now comparo of false braking events. (I hope.)

Improved Radar Processing & Situational Awareness

The improved signal processing also means the system can see two cars ahead, which should mitigate a problem allegedly depicted in several dashcam videos, most recently the January crash in China, which may or may not have occurred on Autopilot.

If Autopilot 7 was engaged while following a vehicle and that vehicle moved out of the lane ahead, there remained the possibility of striking, say, a car stopped in the same lane. In Autopilot 8, that likelihood appears to be greatly reduced, because the system can see two cars stacked. To wit:

Here’s a lane on the right I wouldn’t want to move into:

Now here’s a truck PLUS two cars on the right:

With or without Autopilot, I’d want to know there’s a second car up ahead on the right. If I chose to pass that truck, and the nearer car made a 90-degree right, and I assumed the right lane was now open, something between frustration and an accident would have been likely.

Autopilot 8 prevents that.

Well done, Tesla. This makes for safer driving even when I’m in full control. Won’t any other company at least put a toe into this water so we can compare alternative visions of how to do this? This level of situational awareness doesn’t require any level of autonomy to make driving safer.
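The scenario described above, in which the car ahead leaves the lane and reveals a stopped car, is easier to see with numbers. The sketch below is a toy model, not how Autopilot works internally: it assumes a tracker that keeps only the nearest N vehicles, and every name and value in it (`Track`, `max_tracks`, the 25 m and 60 m gaps, 15 m/s) is hypothetical. With a depth of one, the system knows nothing about the lane once the car it was following leaves; with a depth of two, the stopped car is already the lead target.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Track:
    """One radar-tracked vehicle ahead (hypothetical representation)."""
    distance_m: float
    speed_mps: float
    in_my_lane: bool


def visible_tracks(tracks: List[Track], max_tracks: int) -> List[Track]:
    """Keep only the nearest max_tracks vehicles, mimicking a depth-limited tracker."""
    return sorted(tracks, key=lambda t: t.distance_m)[:max_tracks]


def lead_target(tracks: List[Track]) -> Optional[Track]:
    """The nearest visible vehicle still in our lane, if any."""
    in_lane = [t for t in tracks if t.in_my_lane]
    return min(in_lane, key=lambda t: t.distance_m, default=None)


def time_to_collision(ego_speed_mps: float, target: Track) -> float:
    """Seconds until impact at current speeds; infinite if we are not closing."""
    closing = ego_speed_mps - target.speed_mps
    return target.distance_m / closing if closing > 0 else math.inf


if __name__ == "__main__":
    # The car we were following has just left the lane; a stopped car waits beyond it.
    leaving = Track(distance_m=25.0, speed_mps=10.0, in_my_lane=False)
    stopped = Track(distance_m=60.0, speed_mps=0.0, in_my_lane=True)
    scene = [leaving, stopped]

    print(lead_target(visible_tracks(scene, max_tracks=1)))  # None: nothing known in lane
    lead = lead_target(visible_tracks(scene, max_tracks=2))
    print(time_to_collision(15.0, lead))  # 4.0 seconds at 15 m/s (about 34 mph)
```

In the one-deep case the stopped car only becomes a target once it is freshly acquired, eating into those four seconds; the two-deep view starts the clock immediately.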

The Relationship Between Information, Data, Confidence & Trust

The improved radar signal processing delivers more than just additional data in the gauge cluster. These extra cars…

…are just a symbol of what the system actually conveys, which is information. A lot of information.

One car ahead is data. Two cars ahead? Information. Data is not information. Information is data that is actionable. Action? Action requires confidence.

If I engage Autopilot based on seeing one car ahead, and then other cars appear or lane markings disappear, and the system either disengages or leads me astray so that I must disengage it myself, my workload increases, and so does my stress. The longer Autopilot stays in one state, engaged or disengaged (that is, the longer a consistent level of driver involvement or monitoring is maintained), the lower my workload and my stress.

Additional layers of data coalesce to form information, allowing for higher confidence levels, which aids in deciding whether to engage Autopilot at all. This is the essence of situational awareness. It allows the user to decide whether or not to use a tool and, once it is in use, to better gauge whether to keep using it.

I know what my eyes see. Or at least, I think I know. If the car can see as much as I can—or more than I can—my confidence in engaging Autopilot will be moderate to high. If the car sees less than I can, then my confidence will be low, and I won’t attempt to engage it. If it is already engaged, I will likely choose to disengage it.

Data. Information. Actionability. Confidence. Work reduction. Stress reduction. Trust.

I will never trust a system that tells me less than my intellect (and survival instinct) requires.

Autopilot 8 is an important step in closing the gap between information and trust. It’s a long continuum between the two, but one that is a little shorter because of Tesla’s work here.

Will anyone trust a Level 4 car that doesn’t tell the driver or passenger what it sees? Trust in Level 4 (or even 3) will require, at least for me, a long period of learning how the car sees, thinks and responds. For my generation, who grew up in total and then partial control of cars, that level of trust isn’t going to fall from the sky.

That level of trust starts here and now, with the first decent stab at showing me what a car can see with even the limited sensor inputs of commercially affordable hardware. If I can safely act upon it, then maybe a car can, too. If I can’t, Level 4 isn’t going to be part of my life.

The kind of people who blindly trust in autonomy at any level are the same people who blindly trust their own driving skill. That’s why we have roughly 38,000 traffic deaths a year in the U.S., the vast majority of them caused by human error.

There’s everything to be learned by watching Tesla attack these unknowns in the light of public scrutiny, and everything, including 1.2 million lives a year globally, to be lost by trying to answer these questions behind closed doors.

TO BE CONTINUED IN PART 2

Tomorrow we’ll continue my first test of Autopilot 8 and look at Improved Lane Changing, New Alerts, Dynamic Maps, Mode Confusion, Brake Regeneration Changes, and the BIG one, Curve & Fleet Speed Adaptation, which I think is the biggest improvement of all, hiding in plain sight.

I’ve got a lot to say, but Tesla has delivered a lot to think and talk about. I look forward to discussing it in the comments.

[CORRECTION: This article was corrected to fix an unintended inversion of the terms “data” and “information,” as well as confusion between the terms “Autopilot” and “automation.”]

Alex Roy is an Editor-at-Large for The Drive, author of The Driver, and set the 2007 Transcontinental “Cannonball Run” Record in 31 hours & 4 minutes. You may follow him on Facebook, Twitter and Instagram.