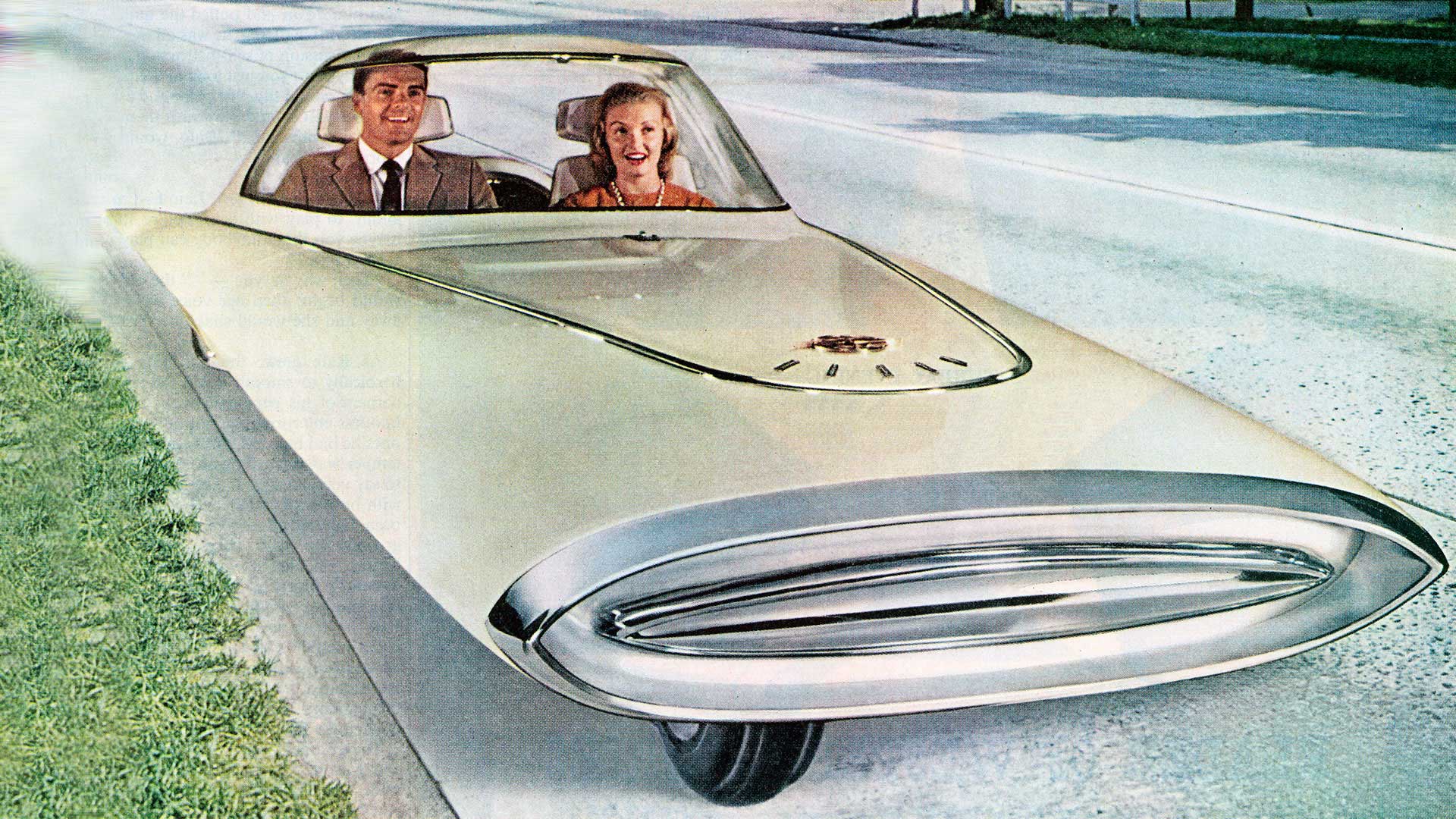

Q: When am I getting my self-driving car? – Some Dude on the Internet

A: Despite a thick carpet-bombing of verbiage that makes it seem that self-driving cars are mere weeks away, no one really knows. Google doesn’t know. Apple doesn’t know. Tesla doesn’t know. And every tech writer in Silicon Valley worth their San Jose State Poli Sci degree doesn’t know, either. Me? I’ll take a guess.

Despite the ceaseless stream of pie-in-the-sky predictions that self-driving cars are coming soon, developing a car that can handle all sorts of driving situations is really tough. Besides the fact that every once in a while a beer truck will drop a keg of Coors Light in the path of a self-driving car, there’s the matter of leaving life and death decisions up to machines.

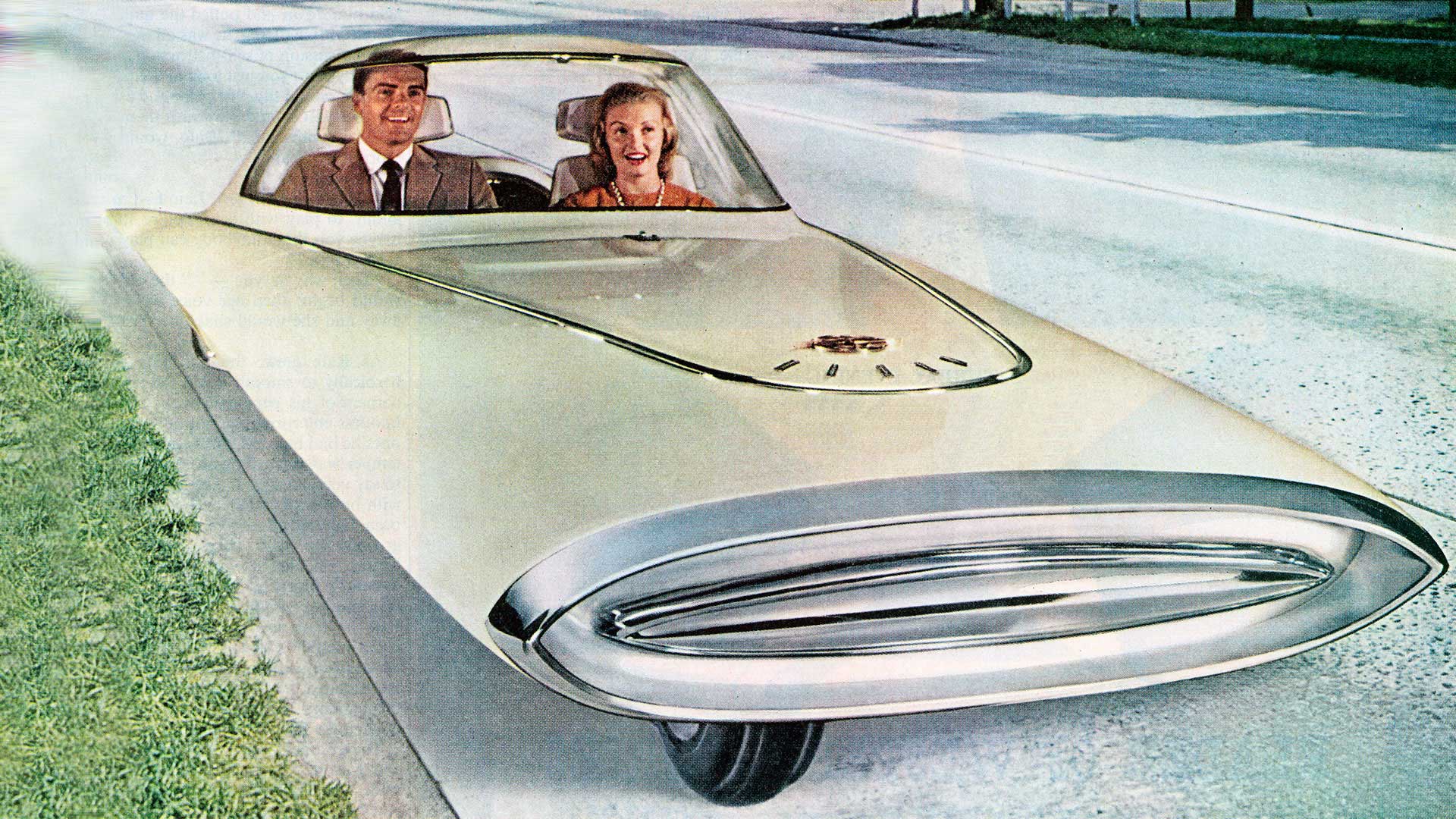

Imagine this: After pulling an eyelid muscle during the morning’s session of cyber pilates, a Palo Alto mom doesn’t want to get out of the massage lounger to take her toddlers to the Montessori advanced coding class. So instead she decides to put little Timmy and Tammy into an autonomous Google-mobile and send them off on their own. And there goes the robo-car merrily down Waverley Street with no one aboard who could competently take control in an emergency.

But there is an emergency. The car suddenly has to decide whether to hit the big dog that just bolted out in front of the car from between parked cars or swerve into the other lane and be hit head on by a garbage truck. Then again maybe the algorithm in charge will decide to slam on the brakes and get rear-ended by the tailgating luxury bus filled with Facebook engineers commuting in from San Francisco. Or the car can decide to hit a parked car and hope (if cars can hope) the unattended kids that are aboard are belted in and the airbags will work.

By most standards—sorry SPCA—the moral choice is to hit the dog. But to reach that decision the car’s computer has to recognize that that’s a dog and not a human being. And then decide that hitting the dog is likely to produce a better outcome than the kids aboard dying in a head-on or rear-end collision. Then it turns out it wasn’t a dog but two kids in a Great Dane suit. Hey, stranger things have happened. So maybe the best thing to do was hit a parked car. And the lawsuits start flying.

Human beings are generally pretty good at telling the difference between real dogs and two kids in a dog suit, and computers may or may not be. And while there’s no guarantee that a human driver would have made the better, more moral choice, at least there’s that chance. Who is responsible when a computer kills someone? My iMac crashed yesterday, but it was parked.

And yet even with the infinite stupidities and surprises that autonomous cars will face, those technical and moral challenges will likely soon be overcome to at least the level that will allow low-speed autonomous vehicles to move through low-density urban situations. And from there they’ll grow progressively more competent and expand into more and more of the whole transportation ecosystem. My guess is that by 2020 the first autonomous cars will be released in limited numbers to the general public.

I have no rational basis for my prediction. But that’s never stopped me before and it’s not stopping me now.

That’s my guess, and autonomous vehicles will surely be a boon to some people. Particularly for people who, for whatever reason, can’t drive themselves. But it’s going to be a long time—decades—before the systems are so foolproof that you can send your four-wheeled robot to take Fido to the dog park by itself. Or pick up the groceries on its own at Whole Foods.

Google and Apple and every car company are placing their bets right now on autonomous cars. They’re big bets. Meanwhile, I lost all my gambling money last week playing the $2/$3 No Limit Hold ’Em at the Player’s Club Casino in Ventura.