At Simon Fraser University’s Autonomy Lab, researchers have been hard at work developing drones that can be piloted exclusively through the user’s facial expressions. This hands-free system is, of course, still in its early stages, but SFU’s roboticists have made some serious headway. According to IEEE Spectrum, the unmanned aerial vehicle (UAV) first requires some user input: the drone needs to learn which facial gestures to tie to which commands, after all. This input phase is the first of three stages in this “hands-free face based” human-robot interaction, dubbed “Ready-Aim-Fly,” which is designed to be as simple and smooth to use as possible.

“Ready” is all about tying the user’s identity to the drone by mapping certain facial expressions to particular UAV commands. Any system that responds to strong gestures also needs a neutral baseline to compare them against, which is why the first step has the user stare into the drone’s camera with as blank an expression as possible. Once the UAV has logged and saved that expression, it’s time to log a “trigger” face, one that is clearly distinct from the neutral expression. This trigger face comes into play later on, when it’s time to actually fly and navigate the UAV.
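The article doesn’t say how the drone tells the trigger face apart from the neutral one, so the following is only a hypothetical sketch of the idea behind the “Ready” calibration. It assumes each face is reduced to a numeric feature vector (for example, facial landmark measurements) and uses a simple nearest-template rule: a new face counts as the trigger if it is closer to the recorded trigger template than to the neutral one. The names `FaceCalibration`, `is_trigger`, and `min_separation` are invented for illustration and are not taken from the SFU paper.

```python
import math
from dataclasses import dataclass
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


@dataclass
class FaceCalibration:
    # Hypothetical templates recorded during the "Ready" phase.
    neutral: Sequence[float]
    trigger: Sequence[float]
    # Hypothetical minimum distance so the trigger face is clearly distinct.
    min_separation: float = 0.1

    def __post_init__(self) -> None:
        # Reject a trigger face that is too close to the neutral baseline.
        if euclidean(self.neutral, self.trigger) < self.min_separation:
            raise ValueError("trigger face is too similar to the neutral face")

    def is_trigger(self, features: Sequence[float]) -> bool:
        """Nearest-template rule: True if features are closer to the trigger face."""
        return euclidean(features, self.trigger) < euclidean(features, self.neutral)


# Usage with made-up two-dimensional feature vectors.
calibration = FaceCalibration(neutral=[0.0, 0.0], trigger=[1.0, 1.0])
print(calibration.is_trigger([0.9, 0.8]))  # close to the trigger template
print(calibration.is_trigger([0.1, 0.0]))  # close to the neutral template
```

Requiring a minimum separation mirrors the article’s point that the trigger face must be distinct from the neutral one; a real system would likely use learned features and a more robust classifier.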

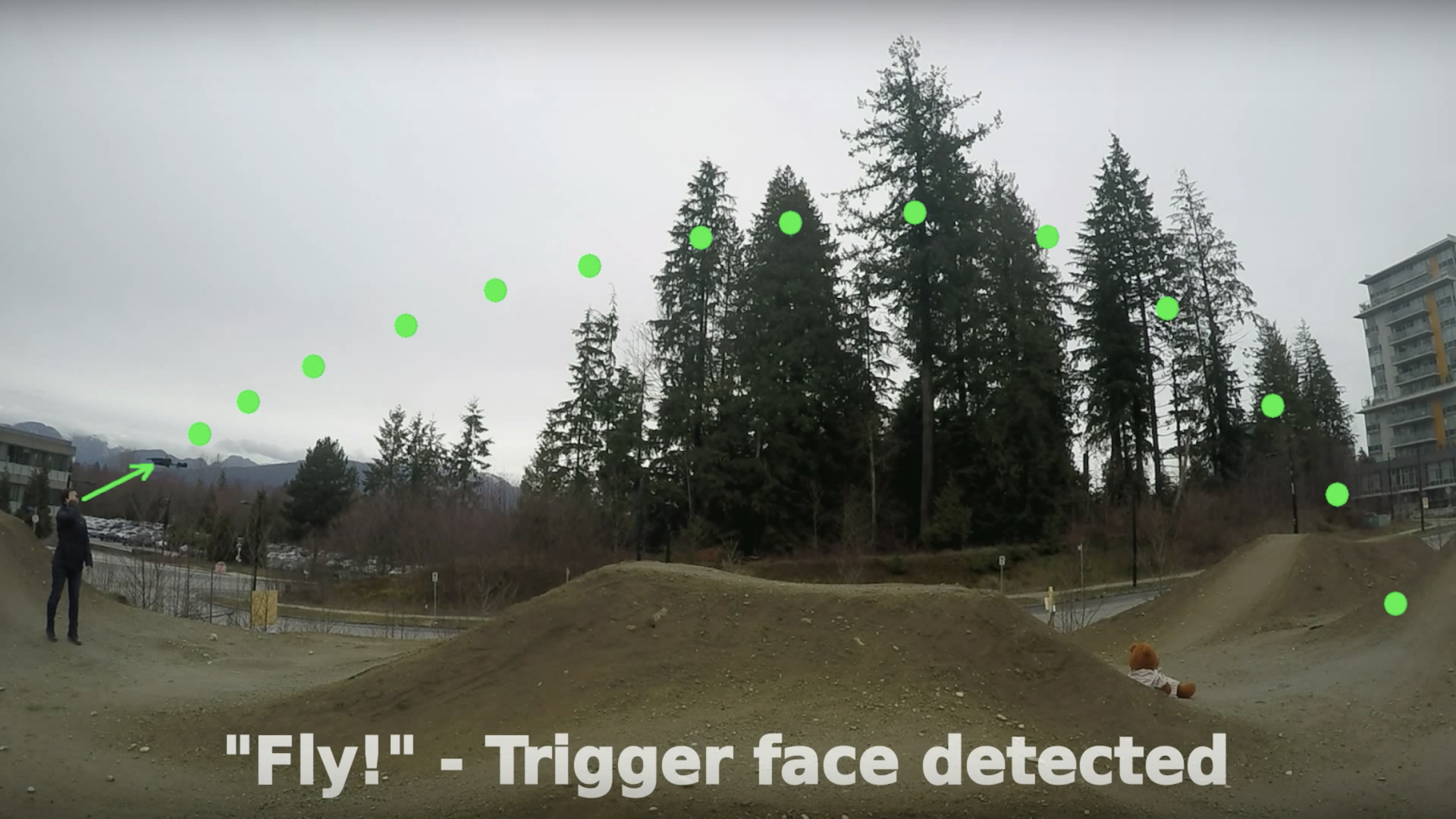

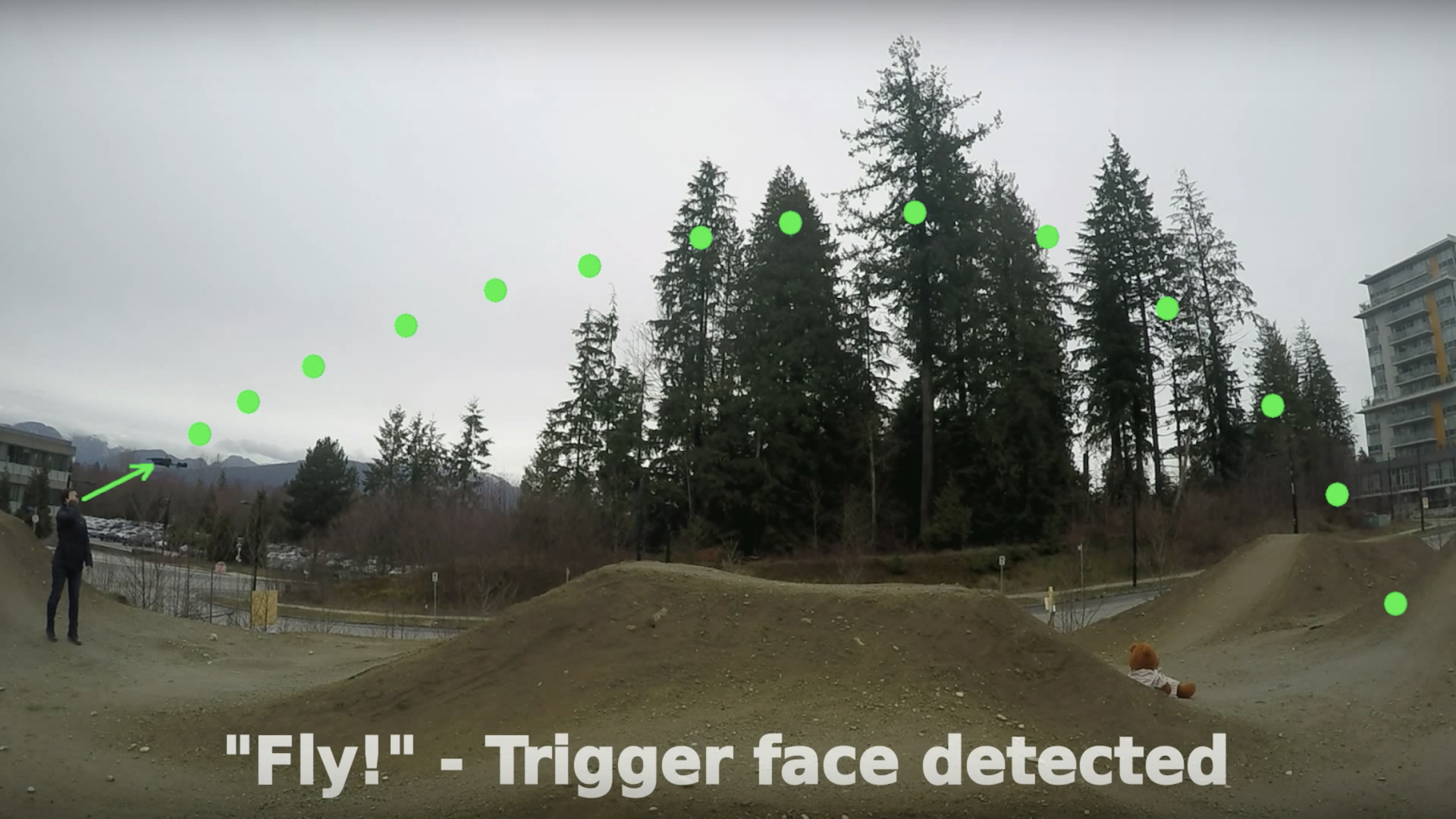

Phase two, “Aim,” has the drone hovering in front of the user, awaiting their input. We’re not quite there yet, but actual flight is near. Picture this: you place the drone in front of you with its camera facing you. Once it takes off and hovers in place, keeping you centered in its view, picture an imaginary rubber band tied between you and your UAV. The further you walk backward, away from the drone, the more that invisible band stretches and the more energy it stores.

Finally, the “Fly” portion can begin, and your logged “trigger” face comes into actual, functional use. Pull your “trigger” face, and the drone launches away from you with a strength that depends on how far you walked away from it during the “Aim” phase. In other words, the more you stretched that imaginary rubber band, the harder the UAV flies off once you pull the trigger. Its route follows a ballistic trajectory, and the drone returns to its user when finished.
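To make the rubber-band analogy concrete, here is a hypothetical sketch, not the researchers’ actual control law. If a band with stiffness k stretched by x stores energy ½kx² and all of it became kinetic energy ½mv², the launch speed would be v = x·√(k/m), which is linear in the stretch. The sketch therefore assumes launch speed is a linear function of the distance walked back (with an invented `gain` parameter) and estimates a drag-free ballistic range on flat ground at an assumed 45° launch angle.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def launch_speed(stretch_m: float, gain: float = 1.5) -> float:
    """Hypothetical linear 'rubber band': launch speed grows with distance walked back.

    gain (in 1/s) is an assumed tuning constant, not a value from the paper.
    """
    if stretch_m < 0:
        raise ValueError("stretch must be non-negative")
    return gain * stretch_m


def ballistic_range(speed: float, angle_deg: float = 45.0) -> float:
    """Drag-free range on flat ground: R = v^2 * sin(2 * theta) / g."""
    theta = math.radians(angle_deg)
    return speed ** 2 * math.sin(2 * theta) / G


# Usage: walking back twice as far doubles the speed under this linear mapping.
for stretch in (2.0, 4.0):
    v = launch_speed(stretch)
    print(f"stretch {stretch} m -> speed {v:.2f} m/s, range {ballistic_range(v):.2f} m")
```

Because range grows with the square of launch speed, doubling the stretch quadruples the range in this idealized model; a real drone fights drag and actively flies its path, so actual distances would differ.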

Let’s take a closer look at this whole process, courtesy of Simon Fraser University.

According to IEEE Spectrum, the “Ready-Aim-Fly” system also supports “beam” and “boomerang” trajectories: the former is a straight shot, while the latter flies, well, like a boomerang. The UAV used in this hands-free, face-based research project is a modified Parrot Bebop drone; the modification adds an LED strip that gives the user the visual feedback needed while programming facial commands into the UAV. The research paper itself, authored by Simon Fraser University’s Jake Bruce, Jacob Perron, and Richard Vaughan, was presented at the IEEE Canadian Conference on Computer and Robot Vision. In what is perhaps the most intriguing part of the paper, the authors lay out their long-term goals with the fervor and enthusiasm found only in people pursuing what they truly love. Enjoy.

“While the demonstrations in the paper have sent the robot on flights of 45 meters outdoors, these interactions scale to hundreds of meters without modification. If the UAV was able to visually servo to a target of interest after reaching the peak of its trajectory (for example another person, as described in another paper under review) we might be able to ‘throw’ the UAV from one person to another over a kilometer or more… The long-term goal of this work is to enable people to interact with robots and AIs as we now interact with people and trained animals, just as long imagined in science fiction… Finally, and informally, we assert that using the robot in this way is fun, so this interaction could have applications in entertainment.”